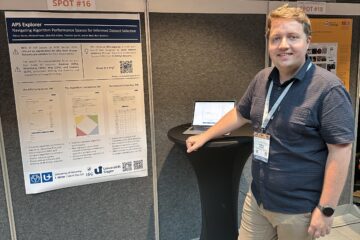

Tobias Vente Presents the APS Explorer at ACM RecSys ’25: Navigating Algorithm Performance Spaces for Informed Dataset Selection

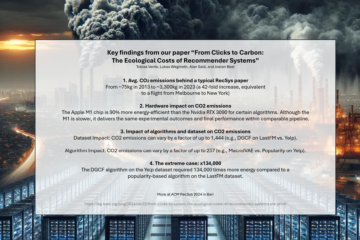

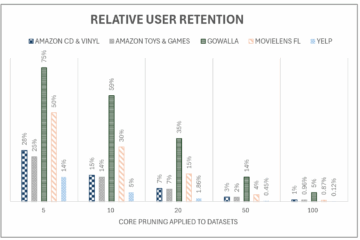

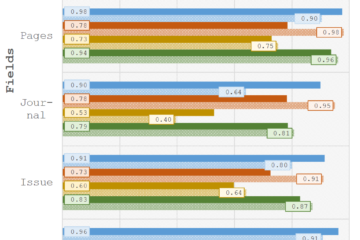

We are excited to share that our PhD student, Tobias Vente, presented our research paper, “APS Explorer: Navigating Algorithm Performance Spaces for Informed Dataset Selection”, at the ACM RecSys 2025 conference, held at the O2 Universum Convention Center in Prague, Czechia.

The Problem: Why RecSys Needs Better Dataset Selection

An analysis of all full papers accepted at …