Update 2013-11-11: For some statistical data, read “On the popularity of reference managers, and their rise and fall”.

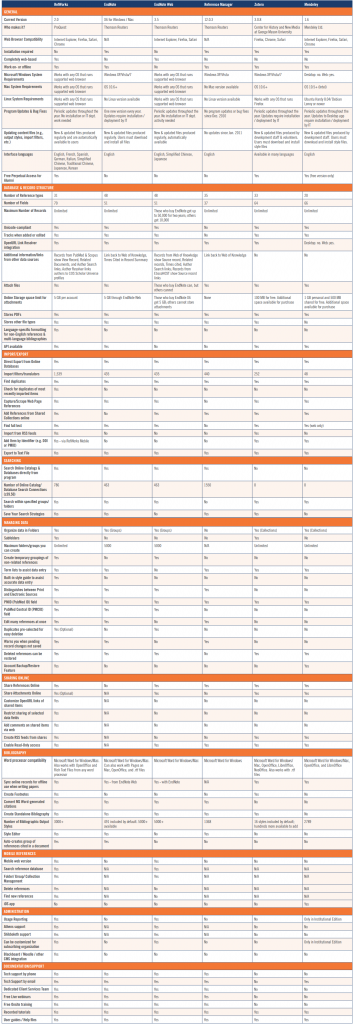

Update 2014-01-15: For a detailed review of Docear and other tools, read “Comprehensive Comparison of Reference Managers: Mendeley vs. Zotero vs. Docear”.

At the time of writing, there are 31 reference management tools listed on Wikipedia, and there are many attempts to identify the best ones, or even the best one (e.g. here, here, here, here, here, here, here, here, … [1]). Typically, reviewers gather a list of features, check which reference managers offer most of them, and declare those the best. Unfortunately, each reviewer has their own preferences about which features are important, and so do you: Are many export formats more important than a mobile version? Is metadata extraction for PDF files more important than importing bibliographic data from academic search engines? Is a thorough manual more important than free support? How important is a large number of citation styles? Do you need a Search & Replace function? Do you want to create synonyms for term lists (whatever that means)? …?

Let’s face the truth: it’s impossible to determine which of the hundreds of potential features you really need.

So how can you find the best reference manager? Recently we took an ironic look at the question of which reference managers are the best. Today we want to offer a more serious analysis and propose to first identify the bad reference managers instead of looking for the very best ones. Once the bad reference managers are found, it should be easier to identify the best one(s) among the few that remain.

What makes a bad – or evil – reference manager? We believe that there are three no-go ‘features’ that make a reference manager so bad (i.e. so harmful in the long run) that you should not use it, even if it offers every other feature you might need.

1. A “lock-in feature” that prevents you from ever switching to a competitor tool

A reference manager might offer exactly the features you need today, but what about in a few years? Maybe your needs change, other reference managers simply become better than your current tool, or your boss tells you that you have to use a specific tool. In that case it is crucial that your current reference manager doesn’t lock you in and allows you to switch to your new favorite reference manager. Otherwise, you will have a serious problem: you might have had the perfect reference manager for the past one or two years, but then you are bound to the now not-so-perfect tool for the rest of your academic life. To be able to switch to another reference manager, your current one should offer at least one of the following three functions (ideally the first one).

- Your data should be stored in a standard format that other reference managers can read

- Your reference manager should be able to export your data in a standard format

- Your reference manager should allow direct access to your data, so other developers can write import filters for it
