This is the pre-print of our upcoming publication at the 13th ACM Conference on Recommender Systems (RecSys’19).

Joeran Beel, Alan Griffin, and Conor O’Shea. 2019. Darwin & Goliath: A White-Label Recommender-System As-a-Service with Automated Algorithm-Selection. In Proceedings of the 13th ACM Conference on Recommender Systems (RecSys’19). ACM, New York, NY, USA, 2 pages. https://doi.org/10.1145/3298689.3347059.

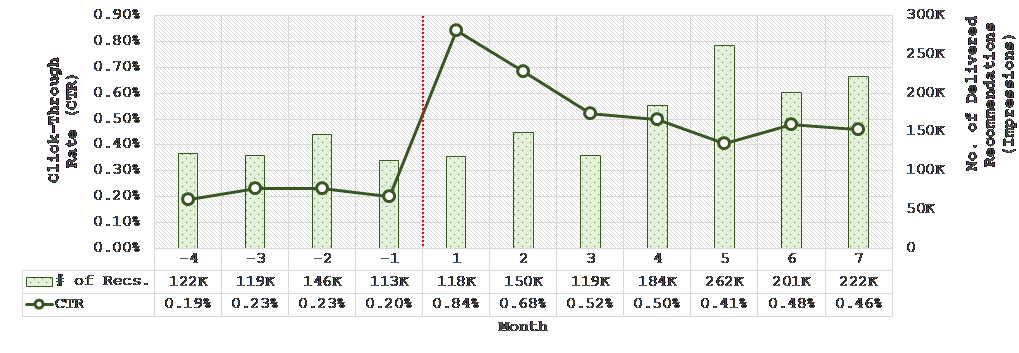

Abstract. Recommendations-as-a-Service (RaaS) ease the process for small and medium-sized enterprises (SMEs) to offer product recommendations to their customers. Current RaaS, however, suffer from a one-size-fits-all concept, i.e. they apply the same recommendation algorithm for all SMEs. We introduce Darwin & Goliath, a RaaS that features multiple recommendation frameworks (Apache Lucene, TensorFlow, …), and identifies the ideal algorithm for each SME automatically. Darwin & Goliath further offers per-instance algorithm selection and a white-label feature that allows SMEs to offer a RaaS under their own brand. Since November 2018, Darwin & Goliath has delivered more than 1 million recommendations with a click-through rate (CTR) of 0.5%.

1 Introduction

Developing and maintaining recommender systems requires significant resources in terms of skills, labor, and infrastructure. Recommendations-as-a-Service (RaaS) and Recommendation APIs decrease the required resources. The terms ‘Recommendation API’ and ‘RaaS’ are typically used interchangeably, and some companies offer both services at the same time. However, for this paper, a distinction is important to illustrate the focus of Darwin & Goliath. Recommendation APIs allow uploading inventory (items’ metadata) and user actions (purchases, ratings, views, …), and requesting item recommendations via the API. Examples of recommendation APIs include Google’s Recommendations AI, Microsoft’s Azure, and Amazon Personalize. Recommendations-as-a-Service require even fewer resources as they are fully automated systems that are integrated, e.g., via a JavaScript snippet into a web page. Everything else – indexing inventory, requesting recommendations, and rendering recommendations – is done by the RaaS. Examples of RaaS include Recombee and Plista [6].

We present Darwin & Goliath, a recommender-system as-a-service. Darwin & Goliath is inspired by, and to some extent succeeds, Mr. DLib [2]. Mr. DLib is a RaaS that focuses on the academic domain, i.e. providing recommendations for research papers. Darwin & Goliath is not just a new version of Mr. DLib. Darwin & Goliath is a for-profit business start-up that was redesigned and re-implemented from scratch, and handles all kinds of items including news, blogs, research articles, and e-commerce products. Most importantly, Darwin & Goliath offers several unique features that set it apart from the competition (details in section 2).

The workflow with Darwin & Goliath is simple and comparable to other RaaS: A small or medium-sized enterprise (SME), which would like to give product recommendations to its customers, integrates a few-line-long JavaScript snippet into its website. This snippet loads the Darwin & Goliath recommendation client. The client requests recommendations from Darwin & Goliath’s API and renders the response (Figure 1, right). Darwin & Goliath does not require SMEs to upload inventory through an API. Instead, SMEs embed their inventory’s metadata into their web pages, and Darwin & Goliath crawls and indexes the inventory. Supported data formats include OpenGraph, Dublin Core, and a proprietary format. Many blogging systems (e.g. WordPress) and e-commerce shopping systems provide out-of-the-box functionality to embed metadata into web pages. Hence, the effort to integrate Darwin & Goliath is just a few minutes for most SMEs.
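To illustrate the crawling step, the following minimal sketch shows how OpenGraph metadata embedded in a web page could be extracted with Python’s standard library. It is not Darwin & Goliath’s actual crawler; the class name, function name, and sample page are assumptions made up for this illustration.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..." content="..."> tags from a page."""

    def __init__(self):
        super().__init__()
        self.metadata = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        content = attributes.get("content")
        # Keep only OpenGraph properties, stripping the "og:" prefix.
        if prop.startswith("og:") and content is not None:
            self.metadata[prop[3:]] = content


def extract_item_metadata(html):
    """Return the OpenGraph properties found in an HTML document."""
    parser = OpenGraphParser()
    parser.feed(html)
    return parser.metadata


# Hypothetical product page of an SME (made-up content for illustration).
SAMPLE_PAGE = """
<html><head>
<meta property="og:title" content="Example Power Adapter" />
<meta property="og:type" content="product" />
<meta property="og:url" content="https://shop.example.com/items/42" />
<meta name="viewport" content="width=device-width" />
</head><body></body></html>
"""

item = extract_item_metadata(SAMPLE_PAGE)
print(item)
```

Tags without an `og:` property, such as the viewport tag in the sample, are ignored. A real crawler would additionally need to handle Dublin Core and the proprietary format mentioned above.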

2 Unique Features

Darwin & Goliath offers four unique features, namely private and public repositories, automated algorithm selection, per-instance algorithm selection, and white labels.

Private and Public Repositories

Most SMEs are interested in recommending items from their inventory only. However, Darwin & Goliath optionally allows SMEs to give recommendations from other SMEs’ repositories (if these SMEs agree). Darwin & Goliath also indexes additional repositories, such as the CORE corpus with 120 million open-access research articles [7]. Currently, our partner JabRef, a reference management software, recommends items from this corpus [5].

Automated Algorithm Selection

Darwin & Goliath uses multiple recommendation frameworks and automated A/B testing. The architecture comprises Apache Lucene (content-based filtering); TensorFlow with variations of word and sentence embeddings including FastText, GloVe, and Google’s USE; and some proprietary algorithms (most-popular and stereotype). Each SME’s inventory and usage data are stored in all frameworks, and via A/B testing, the most effective algorithm is identified. This is a significant advantage for SMEs as different recommendation algorithms may perform vastly differently for different partners. For instance, the algorithm that performs best for JabRef performs worst for our partner Sowiport and vice versa [3]. Hence, using just a single algorithm for all partners – as other RaaS do – is not optimal.
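The idea of selecting an algorithm per SME via A/B testing can be sketched as follows. This is a simplified illustration under our own assumptions, not the production implementation: the algorithm labels, the `ABTest` class, the `min_delivered` threshold, and all numbers are hypothetical, and a real system would likely add a statistical significance test before declaring a winner.

```python
import random
from collections import defaultdict

# Hypothetical labels for the algorithm families named in the text.
ALGORITHMS = ["lucene_cbf", "embeddings", "most_popular", "stereotype"]


class ABTest:
    """Tracks delivered and clicked recommendations per algorithm for one SME."""

    def __init__(self, algorithms, seed=None):
        self.algorithms = list(algorithms)
        self.rng = random.Random(seed)
        self.delivered = defaultdict(int)
        self.clicked = defaultdict(int)

    def assign(self):
        """Pick an algorithm uniformly at random for the next request."""
        return self.rng.choice(self.algorithms)

    def record(self, algorithm, delivered, clicked):
        """Add observed delivery and click counts for an algorithm."""
        self.delivered[algorithm] += delivered
        self.clicked[algorithm] += clicked

    def ctr(self, algorithm):
        """Click-through rate as a fraction; 0.0 if nothing was delivered."""
        n = self.delivered[algorithm]
        return self.clicked[algorithm] / n if n else 0.0

    def best(self, min_delivered=1000):
        """Return the highest-CTR algorithm that has enough data, else None."""
        eligible = [a for a in self.algorithms
                    if self.delivered[a] >= min_delivered]
        if not eligible:
            return None
        return max(eligible, key=self.ctr)


# Made-up counts for one hypothetical SME.
test = ABTest(ALGORITHMS, seed=0)
test.record("lucene_cbf", delivered=5000, clicked=30)
test.record("embeddings", delivered=5000, clicked=20)
test.record("most_popular", delivered=200, clicked=5)  # too little data so far

winner = test.best()
print(winner)
```

Keeping one `ABTest` instance per SME reflects the observation that the best algorithm for one partner may be the worst for another.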

Per-Instance Algorithm Selection

Darwin & Goliath does not only identify the overall best algorithm for each SME. Darwin & Goliath also applies per-instance algorithm selection, or, as we call it, micro recommendations [1]. For each recommendation request from an SME, Darwin & Goliath predicts which algorithm will create the best recommendations. This meta-learning is performed with the H2O framework. While there is increasing research in this area [4, 8], Darwin & Goliath is, to the best of our knowledge, the only recommender-system as-a-service that applies meta-learned per-instance algorithm selection in practice.
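The following sketch conveys the general idea of per-instance selection with a fallback to the overall best algorithm. It deliberately replaces the H2O-based meta-learner with a simple lookup table of click statistics per request context, so it is a stand-in rather than the method used in production. The context features, class name, threshold, and data are all hypothetical.

```python
from collections import defaultdict


class PerInstanceSelector:
    """Chooses an algorithm per request context from observed click statistics.

    A lookup-table stand-in for a learned meta-model (assumption for
    illustration only).
    """

    def __init__(self, fallback_algorithm):
        # Overall best algorithm for the SME, used when a context is unknown.
        self.fallback = fallback_algorithm
        # context -> algorithm -> [delivered, clicked]
        self.stats = defaultdict(lambda: defaultdict(lambda: [0, 0]))

    def observe(self, context, algorithm, clicked):
        """Record one delivered recommendation and whether it was clicked."""
        counts = self.stats[context][algorithm]
        counts[0] += 1
        counts[1] += int(clicked)

    def select(self, context, min_delivered=50):
        """Return the algorithm with the highest CTR for this context."""
        candidates = {
            algo: clicks / shown
            for algo, (shown, clicks) in self.stats.get(context, {}).items()
            if shown >= min_delivered
        }
        if not candidates:
            return self.fallback
        return max(candidates, key=candidates.get)


selector = PerInstanceSelector(fallback_algorithm="lucene_cbf")
context = ("product", "short_title")  # hypothetical request features
for i in range(100):
    selector.observe(context, "embeddings", clicked=(i % 10 == 0))  # 10 clicks
    selector.observe(context, "lucene_cbf", clicked=(i % 50 == 0))  # 2 clicks

choice_known = selector.select(context)
choice_unknown = selector.select(("news", "long_title"))
print(choice_known, choice_unknown)
```

For a context with enough observations, the selector picks the locally best algorithm; for an unseen context, it falls back to the SME-wide choice.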

White Labels

Darwin & Goliath enables third parties to offer recommendations-as-a-service under their own brand name, while Darwin & Goliath provides all services. Such a white-label partner can customize all URLs, text snippets, logos, etc. For instance, instead of integrating the JavaScript client via https://clients.darwingoliath.com/js/getRecs.js, the partner uses https://clients.SomeWhiteLabelURL.com/js/getRecs.js. Currently, we operate two white labels ourselves, and an external partner operates a third white label. The first white label is Mr. DLib [2], which continues to operate as a RaaS for academia. Second, RecSoup.com is a RaaS specifically for online retailers (private beta). Third, ARP-Rec is a RaaS add-on for WordPress blogs (private beta). While all white labels ultimately use Darwin & Goliath, they differ in some features and in pricing.

3 Usage Statistics

Darwin & Goliath and its white labels are used by JabRef and MyVolts and are currently being tested with several other websites (private beta). Since its launch in Nov. 2018, Darwin & Goliath has delivered 1.25 million recommendations, of which 6,388 were clicked, which equals a click-through rate (CTR) of 0.51% (Figure 2; Months 1 to 7). In the first month after Darwin & Goliath’s launch, CTR was 0.84%. In subsequent months, CTR decreased to around 0.45% and has remained stable since then. Compared to Mr. DLib’s original technology (Figure 2; Months -4 to -1), Darwin & Goliath’s CTR is around twice as high.
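The overall CTR follows directly from the reported counts, as the short calculation below shows. The function name is our own, and the 1.25 million figure is the rounded number reported above.

```python
def click_through_rate(clicks, recommendations):
    """Return the click-through rate as a percentage."""
    return 100.0 * clicks / recommendations


# Counts reported for months 1 to 7 (recommendation count is rounded).
overall_ctr = click_through_rate(6_388, 1_250_000)
print(f"{overall_ctr:.2f}%")  # prints 0.51%
```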

REFERENCES

[1] Beel, J. 2017. A Macro/Micro Recommender System for Recommendation Algorithms [Proposal]. ResearchGate (2017).

[2] Beel, J. et al. 2017. Mr. DLib: Recommendations-as-a-service (RaaS) for Academia. 17th ACM/IEEE JCDL Conference (2017), 313–314.

[3] Collins, A. and Beel, J. 2019. Keyphrases vs. Document Embeddings vs. Terms for Recommender Systems: An Online Evaluation. Proceedings of the ACM/IEEE-CS Joint Conference on Digital Libraries (JCDL) (2019).

[4] Cunha, T. et al. 2018. CF4CF: recommending collaborative filtering algorithms using collaborative filtering. Proceedings of the 12th ACM Conference on Recommender Systems (RecSys) (2018), 357–361.

[5] Feyer, S. et al. 2017. Integration of the Scientific Recommender System Mr. DLib into the Reference Manager JabRef. Proceedings of the 39th European Conference on Information Retrieval (ECIR) (2017), 770–774.

[6] Kille, B. et al. 2013. The plista dataset. International News Recommender Systems Workshop and Challenge (2013), 16–23.

[7] Knoth, P. and Zdrahal, Z. 2012. CORE: three access levels to underpin open access. D-Lib Magazine. 18, 11/12 (2012).

[8] Mansoury, M. and Burke, R. 2019. Algorithm selection with librec-auto. Proceedings of The 1st Interdisciplinary Workshop on Algorithm Selection and Meta-Learning in Information Retrieval (AMIR) (2019).
