As some of you might know, I am a PhD student, and my research focuses on research-paper recommender systems. I am now about to finish an extensive literature review of more than 200 research articles on research-paper recommender systems. My colleagues and I summarized the findings in this 43-page preprint. The preprint is at an early stage, and we still need to double-check some numbers and improve the grammar, but we would like to share the article anyway. If you are interested in research-paper recommender systems, it will hopefully give you a good overview of the field. The review is also quite critical and should give you some good ideas about current problems and interesting directions for further research.

If you read the preprint and find any errors, or if you have suggestions on how to improve the survey, please let us know by email. If you are interested in proofreading the article, let us know, and we will send you the MS Word document.

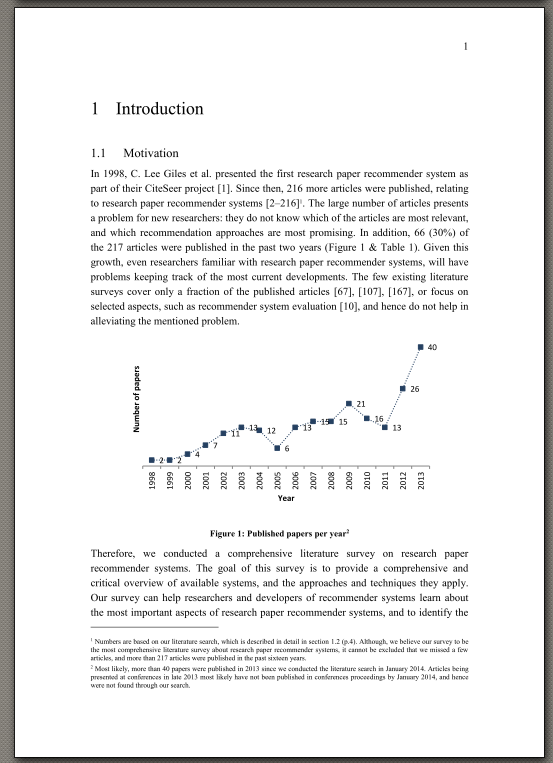

Abstract. Between 1998 and 2013, more than 300 researchers published more than 200 articles in the field of research-paper recommender systems. We reviewed these articles and found that content-based filtering was the predominantly applied recommendation concept (53%). Collaborative filtering was applied by only 12% of the reviewed approaches, and graph-based recommendations by 7%. Other recommendation concepts included stereotyping, item-centric recommendations, and hybrid recommendations. The content-based filtering approaches mainly utilized papers that the users had authored, tagged, browsed, or downloaded. TF-IDF was the most frequently applied weighting scheme. Stop words were removed by only 31% of the approaches, and stemming was applied by 24%. Aside from simple terms, n-grams, topics, and citations were also utilized. Our review revealed some serious limitations of the current research. First, it remains unclear which recommendation concepts and techniques are most promising. Different papers reported different results on, for instance, the performance of content-based and collaborative filtering. Sometimes content-based filtering performed better than collaborative filtering, and sometimes it was exactly the opposite. We identified three potential reasons for the ambiguity of the results. First, many of the evaluations were inadequate, for instance, with strongly pruned datasets, few participants in user studies, and no appropriate baselines. Second, most authors provided sparse information on their algorithms, which makes it difficult to re-implement the approaches or to analyze why evaluations might have produced different results. Third, we speculate that there is no simple answer to finding the most promising approaches, and that minor variations in datasets, algorithms, or user populations inevitably lead to strong variations in the performance of the approaches.
A second limitation relates to the fact that many authors neglected factors beyond accuracy, for example, overall user satisfaction and developer satisfaction. In addition, the user modeling process was widely neglected: 79% of the approaches let their users provide some keywords, text snippets, or a single paper as input, and did not infer information automatically. Runtime information was provided for only 11% of the approaches. Finally, it seems that much of the research was conducted in an ivory tower. Barely any of the research had an impact on research-paper recommender systems in practice, which mostly use very simple recommendation approaches. We also identified a lack of authorities and persistence: 67% of the authors published only a single paper, and there was barely any cooperation among different co-author groups. We conclude that several actions need to be taken to improve the situation. Among other things, the field needs a common evaluation framework, a discussion about which information to provide in research papers, a stronger focus on non-accuracy aspects and user modeling, a platform for researchers to exchange information, and an open-source framework that bundles the available recommendation approaches.
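For readers new to the field, the abstract's most common setup (content-based filtering with TF-IDF weights, using papers the user authored or downloaded as input) can be sketched roughly as below. This is a minimal illustration under our own assumptions, not the method of any reviewed paper: the user profile is simply the sum of the TF-IDF vectors of the user's papers, candidates are ranked by cosine similarity, TF-IDF is computed with one common variant (tf × log(N/df)), stop words come from a tiny hypothetical list, and no stemming is applied. The function names and example titles are made up for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list (illustration only).
STOP_WORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def tokenize(text):
    """Lower-case the text, keep alphabetic tokens, drop stop words (no stemming)."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]


def tfidf_vectors(docs):
    """Return one {term: weight} dict per document, weighted as tf * log(N / df)."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    df = Counter(term for toks in tokenized for term in set(toks))
    return [
        {term: count * math.log(n / df[term]) for term, count in Counter(toks).items()}
        for toks in tokenized
    ]


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(user_papers, candidates, k=2):
    """Rank candidates by similarity to a profile built from the user's own papers."""
    vectors = tfidf_vectors(user_papers + candidates)
    profile = Counter()
    for vec in vectors[: len(user_papers)]:
        profile.update(vec)  # user profile = sum of the user's paper vectors
    scored = [
        (cosine(profile, vec), i)
        for i, vec in enumerate(vectors[len(user_papers):])
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [candidates[i] for _, i in scored[:k]]


# Made-up titles standing in for papers the user authored or downloaded.
user_papers = [
    "Collaborative filtering for research paper recommendation",
    "Content based filtering of scientific articles",
]
candidates = [
    "A citation graph approach to paper recommendation",
    "Protein folding with deep networks",
    "Content based recommendation of research articles",
]
print(recommend(user_papers, candidates, k=3))
```

The `print` call lists the candidates from most to least similar to the profile; the protein-folding title ends up last with a score of zero because it shares no terms with the user's papers. Adding stemming or a larger stop-word list would only change `tokenize`, which is exactly the kind of detail the review found is often left unreported.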

4 Comments

Manish Jaiswal · 11th May 2015 at 12:40

Thanks for sharing this article. I am a PhD scholar in the field of recommender systems and in the survey phase. Once I have read the paper you shared, I will post comments if required.

Thanks

G Hemalatha · 23rd September 2014 at 14:57

Thanks for the article. I am a PhD scholar doing research on recommender systems. The information shared is very useful to me. After studying it, I will send my comments and reviews at the earliest. Kindly send the Word document to the email address given above at your earliest convenience.

tedus · 12th May 2014 at 08:36

Got a question for you:

I used Recently until it was recently (yeah, I know, double) shut down (for some reason I did not dig into that much).

Since then I have been looking at Pubchase, as I personally like the "stand-alone" recommender, where you say: I like these x articles, show me some more; I rate them as relevant/not relevant/no opinion; and it keeps showing me new articles. All of that is available for several topics.

I also use Alerts to keep me informed about new findings.

I also use ResearchGate etc., but only for networking and browsing papers.

Do you know about these, or of a good overview of existing recommender systems?

I read (but never could find out how) that it is also possible to get alerts on new citations of an article of choice. I know this from SciFinder (which I do not use), and there is also the Google Scholar citation feature.

Regarding your review: I found no errors while browsing through it, but I have a suggestion: clickable links (like in the footnotes) and clickable citations (leading to the bibliography) would be nice. I don't know if you use LaTeX (it doesn't look like it, actually); there, this would be easy to integrate with minor work. Anyway, keep it up.

Joeran [Docear] · 24th September 2014 at 06:57

Thanks for the offer. However, we have already submitted the article to a journal and are awaiting feedback. Depending on the feedback, I might get back to your offer and send you an email.