We published a manuscript on arXiv about the first living lab for scholarly recommender systems. This lab allows recommender-system researchers to conduct online evaluations of their novel algorithms for scholarly recommendations, e.g., of research papers, citations, conferences, and research grants. Recommendations are delivered through the living lab’s API to platforms such as reference-management software and digital libraries. The living lab is built on top of the recommender-system-as-a-service Mr. DLib. Current partners are the reference-management software JabRef and the CORE research team. We present the architecture of Mr. DLib’s living lab as well as usage statistics for its first ten months of operation. During this time, 970,517 recommendations were delivered with a mean click-through rate of 0.22%.

1 Introduction

‘Living labs’ for recommender systems enable researchers to evaluate their recommendation algorithms with real users in realistic scenarios. Such living labs, sometimes also called ‘Evaluation-as-a-Service’ [14, 16], are usually built on top of production recommender systems in real-world platforms such as news websites. Via an API, external researchers ‘plug in’ their experimental recommender systems to the living lab. When recommendations are needed for users of the platform, the living lab sends a request to the researcher’s experimental recommender system, which returns a list of recommendations that are displayed to the user. The user’s actions (clicks, downloads, purchases, etc.) are logged and can be used to evaluate the algorithm’s effectiveness.

Living labs are available for many recommender-system domains, particularly news [11, 15, 19], and they have attracted dedicated workshops [1]. There is also work on a living lab for search and browsing behavior in a digital library [12]. However, to the best of our knowledge, there are no living labs for scholarly recommendations, i.e., recommendations for research articles [24, 29], citations [18], conferences [10], reviewers [23], quotes [28], research grants, or collaborators [21]. Consequently, researchers in the field of scholarly recommender systems predominantly rely on offline evaluations [5], which tend to be poor predictors of how algorithms will perform in a production recommender system [7, 25, 27].

In this paper, we present the first living lab for scholarly recommendations, built on top of Mr. DLib, a scholarly recommendations-as-a-service provider [2, 6]. The main contribution of this living lab is an environment that allows researchers in the field of scholarly recommendations to evaluate their novel recommendation algorithms with real users, in addition to or instead of conducting offline evaluations.

2 Mr. DLib’s Scholarly Living Lab

Mr. DLib’s living lab is open to two types of partners: first, platform operators who want to provide their users with recommendations for research articles; second, research partners who want to evaluate their novel research-paper recommendation algorithms with real users. Mr. DLib acts as an intermediary between these partners. Currently, Mr. DLib’s platform partner is the reference-management software JabRef [13, 22], and its research partner is CORE [17, 20, 26]. Mr. DLib also operates its own internal recommendation engine, which applies content-based filtering with terms, key-phrases, and word embeddings, as well as stereotype and most-popular recommendations [3, 4]. Thus, Mr. DLib’s internal recommendation engine establishes a baseline against which research partners can compare their novel algorithms.
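Term-based content filtering, one of the techniques named above, can be illustrated with a small TF-IDF ranker: represent the source title and each candidate article as TF-IDF vectors and return the candidates with the highest cosine similarity. This is a generic, self-contained sketch, not Mr. DLib’s implementation; the function names `tokenize` and `recommend`, the smoothed IDF weighting, and the toy corpus are assumptions for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Lowercase and split into alphanumeric terms.
    return re.findall(r"[a-z0-9]+", text.lower())


def recommend(source_title: str, corpus: Dict[str, str], k: int = 6) -> List[str]:
    """Return up to k corpus ids ranked by TF-IDF cosine similarity to the source title."""
    ids = list(corpus)
    tokens = {doc_id: tokenize(text) for doc_id, text in corpus.items()}
    n = len(ids)
    # Document frequency: in how many corpus documents each term occurs.
    df = Counter(term for toks in tokens.values() for term in set(toks))

    def vectorize(toks: List[str]) -> Dict[str, float]:
        # Term frequency times smoothed inverse document frequency.
        tf = Counter(toks)
        return {t: c * (math.log((1 + n) / (1 + df[t])) + 1.0) for t, c in tf.items()}

    def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
        dot = sum(w * b.get(t, 0.0) for t, w in a.items())
        norm_a = math.sqrt(sum(w * w for w in a.values()))
        norm_b = math.sqrt(sum(w * w for w in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    query = vectorize(tokenize(source_title))
    scored = [(cosine(query, vectorize(tokens[doc_id])), doc_id) for doc_id in ids]
    # Drop items with no term overlap; sort by descending score, ties broken by id.
    ranked = sorted((p for p in scored if p[0] > 0.0), key=lambda p: (-p[0], p[1]))
    return [doc_id for _, doc_id in ranked[:k]]


# Toy corpus (hypothetical ids and titles).
corpus = {
    "a": "Research paper recommender systems survey",
    "b": "Deep learning for image recognition",
    "c": "Evaluating recommender systems online and offline",
}
top = recommend("Recommender systems evaluation", corpus, k=2)
```

A production engine would typically delegate indexing and scoring to a search library rather than recomputing vectors per request; the sketch recomputes everything only to stay short and dependency-free.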

The workflow of Mr. DLib’s living lab is illustrated in Figure 1. When a JabRef user requests recommendations for a publication in his or her personal library by selecting the “Related Articles” tab, a request containing the source article’s title is sent to Mr. DLib’s API. Mr. DLib’s A/B engine randomly forwards the request either to Mr. DLib’s internal recommender system or to CORE’s recommender system. The list of recommendations is returned to and displayed in JabRef. When a user clicks a recommendation, a logging event is sent to Mr. DLib for evaluation purposes.
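The routing step of this workflow can be sketched as follows. This is a minimal illustration, not Mr. DLib’s A/B engine: the class `ABEngine`, its methods `handle_request` and `log_click`, the engine names `mdl` and `core`, and the 75/25 routing weights are all hypothetical, since the actual routing configuration is not stated here.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A recommender maps a source-article title to a list of recommended items.
Recommender = Callable[[str], List[str]]


@dataclass
class ABEngine:
    """Hypothetical A/B router: each request is served by exactly one engine."""
    engines: Dict[str, Recommender]
    weights: Dict[str, float]  # assumed routing probabilities, not Mr. DLib's settings
    rng: random.Random = field(default_factory=random.Random)
    log: List[dict] = field(default_factory=list)

    def handle_request(self, source_title: str) -> Tuple[int, str, List[str]]:
        names = list(self.engines)
        # Randomly pick the engine for this request, proportionally to its weight.
        name = self.rng.choices(names, weights=[self.weights[n] for n in names], k=1)[0]
        recs = self.engines[name](source_title)
        request_id = len(self.log)
        # Remember which engine served the request so clicks can be attributed to it.
        self.log.append({"request_id": request_id, "engine": name,
                         "delivered": len(recs), "clicks": 0})
        return request_id, name, recs

    def log_click(self, request_id: int) -> None:
        # Called when the client platform reports a click on a recommendation.
        self.log[request_id]["clicks"] += 1


# Usage with two stand-in engines that each return 6 placeholder items.
engine = ABEngine(
    engines={"mdl": lambda t: [f"mdl-{i}" for i in range(6)],
             "core": lambda t: [f"core-{i}" for i in range(6)]},
    weights={"mdl": 0.75, "core": 0.25},
    rng=random.Random(42),
)
request_id, served_by, recs = engine.handle_request("Research paper recommender systems")
engine.log_click(request_id)
```

The sketch randomizes per request, matching the description above; one consequence is that the same user may receive recommendations from both engines, whereas per-user bucketing would keep each user with one engine.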

Mr. DLib’s living lab is open to any research partner whose experimental recommender system recommends scholarly items; is available through a REST API; accepts a string as input (typically a source article’s title); and returns a list of related articles, including URLs of web pages from which the recommended articles can be downloaded (preferably open access). In addition, recommendations must be returned in less than 2 seconds. Currently, Mr. DLib’s team integrates each researcher’s recommendation API manually. In the long run, we plan to standardize the data format that partners must provide, to ease integration.
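The integration requirements above (string input, a list of items with download URLs, a 2-second limit) suggest how a living lab might guard its calls to a partner. The sketch below is hypothetical: it models the partner’s REST API as a plain Python callable so that it runs offline, and the item fields `title` and `url` are assumed, since the data format is not yet standardized. Responses that arrive late, fail, or contain no valid items yield `None`, which a caller could treat as a signal to fall back to the internal engine.

```python
import concurrent.futures
from typing import Callable, List, Optional
from urllib.parse import urlparse

# A partner is modeled as a callable; in practice it would wrap an HTTP call
# to the partner's REST API (hypothetical abstraction).
Partner = Callable[[str], List[dict]]

TIMEOUT_SECONDS = 2.0  # latency limit stated for research partners

# Shared worker pool; a timed-out call keeps running in its thread,
# but its result is ignored.
_pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def is_valid_item(item: dict) -> bool:
    """An item needs a title and an http(s) URL of a page where the article can be downloaded."""
    url = urlparse(str(item.get("url", "")))
    return bool(item.get("title")) and url.scheme in ("http", "https") and bool(url.netloc)


def fetch_with_deadline(partner: Partner, source_title: str,
                        timeout: float = TIMEOUT_SECONDS) -> Optional[List[dict]]:
    """Return the partner's valid recommendations, or None if it is too slow or fails."""
    future = _pool.submit(partner, source_title)
    try:
        items = future.result(timeout=timeout)
    except concurrent.futures.TimeoutError:
        return None  # deadline missed
    except Exception:
        return None  # the partner raised an error
    if not isinstance(items, list):
        return None  # malformed response
    valid = [item for item in items if isinstance(item, dict) and is_valid_item(item)]
    return valid or None
```

Enforcing the deadline on the caller’s side, rather than trusting the partner to respond in time, keeps a slow experimental system from delaying what the platform’s users see.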

3 Usage Statistics

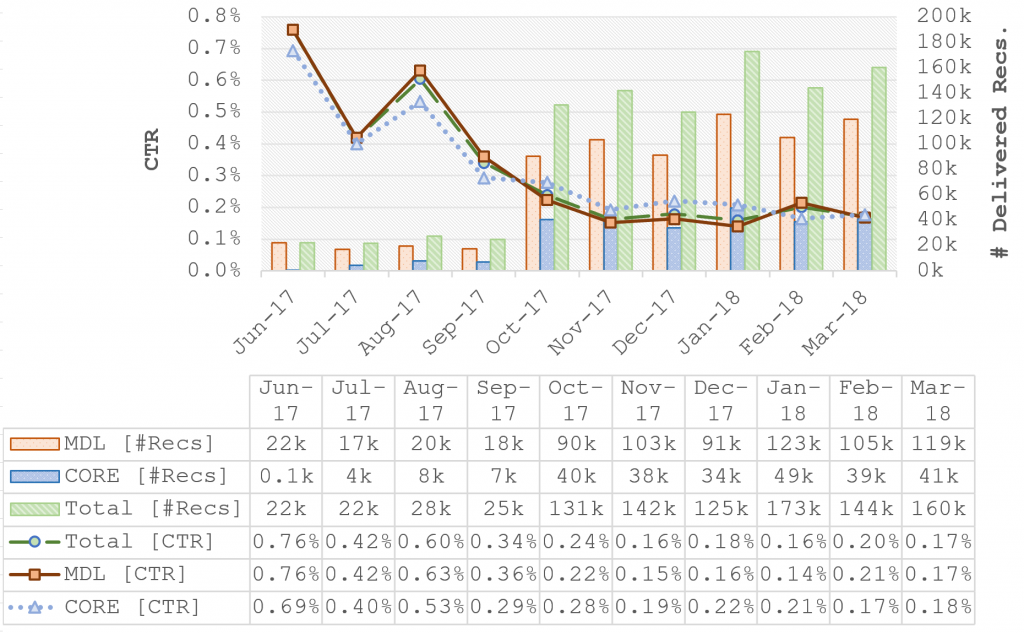

Mr. DLib’s living lab was launched in June 2017 and was first integrated into a beta version of JabRef. During the beta phase (until September 2017), JabRef sent around 4,200 requests per month to Mr. DLib (Figure 2). For each request, Mr. DLib typically returned 6 recommendations (25k recommendations in total), of which 20% to 30% were generated by CORE and the remainder by Mr. DLib’s internal recommendation engine. The click-through rate decreased from 0.76% in June to 0.34% in September (Figure 2). This decline is consistent with click-through rates on Mr. DLib’s other partner platforms that do not participate in the living lab [3, 4], as well as with click-through rates in other research-paper recommender systems [5, 8]. We can only speculate why the click-through rate decreases over time. Users may be more curious in the beginning and click recommendations more often. Alternatively, recommendations may become worse over time, or may simply not be as good as users expected, so that users lose interest.

After the beta phase, i.e., from October 2017 onward, the number of delivered recommendations increased to 150k per month, again with 20% to 30% of the recommendations generated by CORE. The overall click-through rate decreased to around 0.18% and remained stable between October 2017 and March 2018.

Interestingly, the click-through rates of CORE and of Mr. DLib’s internal recommendation engine are almost identical over the 10-month data-collection period. Both systems mostly use Apache Lucene for their recommendation engines, yet there are notable differences in their algorithms and document corpora. We do not elaborate further on the implementations but refer the interested reader to [2–4, 26]. The interesting point here is that two separately implemented recommender systems perform almost identically. It is also noteworthy that the click-through rate of around 0.18% in the reference-management software JabRef is quite similar to the click-through rate in the social-science repository Sowiport [4].
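Comparing the two engines, as in Figure 2, amounts to computing a click-through rate per month and engine: clicks divided by delivered recommendations. The sketch below does this from a stream of log events whose field names (`month`, `engine`, `type`) are assumptions and do not reflect the actual schema of RARD II. To gauge whether two ‘almost identical’ rates could differ merely by chance, it also includes a standard two-proportion z-test; this test is an illustrative addition, not an analysis reported in this paper.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple


def ctr_table(events: Iterable[dict]) -> Dict[Tuple[str, str], float]:
    """Click-through rate per (month, engine), plus a per-month 'total' row.

    Each event is a dict with hypothetical keys 'month', 'engine' and 'type',
    where 'type' is 'delivered' (one recommendation shown) or 'click'.
    """
    delivered: Counter = Counter()
    clicks: Counter = Counter()
    for e in events:
        # Count every event both for its engine and for the monthly total.
        for engine in (e["engine"], "total"):
            key = (e["month"], engine)
            if e["type"] == "delivered":
                delivered[key] += 1
            elif e["type"] == "click":
                clicks[key] += 1
    # CTR = clicks / delivered recommendations
    return {key: clicks[key] / n for key, n in delivered.items()}


def two_proportion_z(clicks_a: int, n_a: int, clicks_b: int, n_b: int) -> float:
    """z statistic for H0: both engines have the same CTR (pooled standard error)."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    p = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(p * (1 - p) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / se if se > 0 else 0.0
```

Two caveats apply. A pooled rate over the whole period (total clicks divided by total deliveries) generally differs from the mean of monthly rates, so it matters which of the two a reported ‘mean click-through rate’ refers to. And the z-test assumes independent impressions, which clicks on several recommendations from the same request need not be, so its result is only a rough check.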

All of Mr. DLib’s data is available in the Related-Article Recommendation Dataset (RARD II) [9]. This data can be used to replicate our calculations and to perform additional analyses.

Figure 2: Click-through rate (CTR) and number of delivered recommendations in JabRef for Mr. DLib’s (MDL) and CORE’s recommendation engines, and in total

4 Future Work

We plan to add more partners on both sides: platform partners who provide access to real users, and research partners who evaluate their novel algorithms via the living lab. We also aim to enable personalized recommendations in addition to the current focus on item similarity, i.e., related-article recommendations. We will also enable the recommendation of other scholarly items such as research grants or research collaborators. Finally, we plan to develop a more automated process for integrating partners, with standard protocols and data formats as well as pre-implemented clients.

Acknowledgements

This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under Grant Number 13/RC/2106. We are further grateful for the support received from Samuel Pearce, Siddharth Dinesh, Sophie Siebert, and Stefan Feyer.

References

[1] Balog, K., Elsweiler, D., Kanoulas, E., Kelly, L. and Smucker, M.D. 2014. Report on the CIKM workshop on living labs for information retrieval evaluation. ACM SIGIR Forum (2014), 21–28.

[2] Beel, J., Aizawa, A., Breitinger, C. and Gipp, B. 2017. Mr. DLib: Recommendations-as-a-Service (RaaS) for Academia. Proceedings of the ACM/IEEE-CS Joint Conference on Digital Libraries (JCDL) (2017), 1–2.

[3] Beel, J. and Dinesh, S. 2017. Real-World Recommender Systems for Academia: The Gain and Pain in Developing, Operating, and Researching them. Proceedings of the Fifth Workshop on Bibliometric-enhanced Information Retrieval (BIR) co-located with the 39th European Conference on Information Retrieval (ECIR 2017) (2017), 6–17.

[4] Beel, J., Dinesh, S., Mayr, P., Carevic, Z. and Raghvendra, J. 2017. Stereotype and Most-Popular Recommendations in the Digital Library Sowiport. Proceedings of the 15th International Symposium of Information Science (ISI) (2017).

[5] Beel, J., Gipp, B., Langer, S. and Breitinger, C. 2016. Research Paper Recommender Systems: A Literature Survey. International Journal on Digital Libraries. 4 (2016), 305–338.

[6] Beel, J., Gipp, B., Langer, S., Genzmehr, M., Wilde, E., Nürnberger, A. and Pitman, J. 2011. Introducing Mr. DLib, a Machine-readable Digital Library. Proceedings of the 11th ACM/IEEE Joint Conference on Digital Libraries (JCDL’11) (2011), 463–464.

[7] Beel, J. and Langer, S. 2015. A Comparison of Offline Evaluations, Online Evaluations, and User Studies in the Context of Research-Paper Recommender Systems. Proceedings of the 19th International Conference on Theory and Practice of Digital Libraries (TPDL) (2015), 153–168.

[8] Beel, J., Langer, S., Gipp, B. and Nürnberger, A. 2014. The Architecture and Datasets of Docear’s Research Paper Recommender System. D-Lib Magazine. 20, 11/12 (2014).

[9] Beel, J., Smyth, B. and Collins, A. 2018. RARD II: The 2nd Related-Article Recommendation Dataset. arXiv (2018).

[10] Beierle, F., Tan, J. and Grunert, K. 2016. Analyzing social relations for recommending academic conferences. Proceedings of the 8th ACM International Workshop on Hot Topics in Planet-scale mObile computing and online Social neTworking (2016), 37–42.

[11] Brodt, T. and Hopfgartner, F. 2014. Shedding light on a living lab: the CLEF NEWSREEL open recommendation platform. Proceedings of the 5th Information Interaction in Context Symposium (2014), 223–226.

[12] Carevic, Z., Schüller, S., Mayr, P. and Fuhr, N. 2018. Contextualised Browsing in a Digital Library’s Living Lab. Proceedings of the 18th ACM/IEEE on Joint Conference on Digital Libraries (Fort Worth, Texas, USA, 2018), 89–98.

[13] Feyer, S., Siebert, S., Gipp, B., Aizawa, A. and Beel, J. 2017. Integration of the Scientific Recommender System Mr. DLib into the Reference Manager JabRef. Proceedings of the 39th European Conference on Information Retrieval (ECIR) (2017).

[14] Hanbury, A., Müller, H., Balog, K., Brodt, T., Cormack, G.V., Eggel, I., Gollub, T., Hopfgartner, F., Kalpathy-Cramer, J., Kando, N. and others 2015. Evaluation-as-a-Service: Overview and outlook. arXiv preprint arXiv:1512.07454. (2015).

[15] Hopfgartner, F., Brodt, T., Seiler, J., Kille, B., Lommatzsch, A., Larson, M., Turrin, R. and Serény, A. 2016. Benchmarking news recommendations: The CLEF NewsREEL use case. ACM SIGIR Forum (2016), 129–136.

[16] Hopfgartner, F., Hanbury, A., Müller, H., Kando, N., Mercer, S., Kalpathy-Cramer, J., Potthast, M., Gollub, T., Krithara, A., Lin, J. and others 2015. Report on the Evaluation-as-a-Service (EaaS) expert workshop. ACM SIGIR Forum (2015), 57–65.

[17] Hristakeva, M., Kershaw, D., Rossetti, M., Knoth, P., Pettit, B., Vargas, S. and Jack, K. 2017. Building recommender systems for scholarly information. Proceedings of the 1st Workshop on Scholarly Web Mining (2017), 25–32.

[18] Jia, H. and Saule, E. 2017. An Analysis of Citation Recommender Systems: Beyond the Obvious. Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2017 (2017), 216–223.

[19] Kille, B., Lommatzsch, A., Gebremeskel, G.G., Hopfgartner, F., Larson, M., Seiler, J., Malagoli, D., Serény, A., Brodt, T. and De Vries, A.P. 2016. Overview of NewsREEL’16: Multi-dimensional evaluation of real-time stream-recommendation algorithms. International Conference of the Cross-Language Evaluation Forum for European Languages (2016), 311–331.

[20] Knoth, P., Anastasiou, L., Charalampous, A., Cancellieri, M., Pearce, S., Pontika, N. and Bayer, V. 2017. Towards effective research recommender systems for repositories. Proceedings of the Open Repositories Conference (2017).

[21] Kong, X., Jiang, H., Wang, W., Bekele, T.M., Xu, Z. and Wang, M. 2017. Exploring dynamic research interest and academic influence for scientific collaborator recommendation. Scientometrics. 113, 1 (2017), 369–385.

[22] Kopp, O., Breitenbücher, U. and Müller, T. 2018. CloudRef – Towards Collaborative Reference Management in the Cloud. Proceedings of the 10th Central European Workshop on Services and their Composition (2018).

[23] Kou, N.M., Mamoulis, N., Li, Y., Li, Y., Gong, Z. and others 2015. A topic-based reviewer assignment system. Proceedings of the VLDB Endowment. 8, 12 (2015), 1852–1855.

[24] Li, S., Brusilovsky, P., Su, S. and Cheng, X. 2018. Conference Paper Recommendation for Academic Conferences. IEEE Access. 6 (2018), 17153–17164.

[25] Moreira, G. de S.P., Souza, G.A. de and Cunha, A.M. da 2015. Comparing Offline and Online Recommender System Evaluations on Long-tail Distributions. Proceedings of the ACM Recommender Systems Conference RecSys (2015).

[26] Pontika, N., Anastasiou, L., Charalampous, A., Cancellieri, M., Pearce, S. and Knoth, P. 2017. CORE Recommender: a plug in suggesting open access content. http://hdl.handle.net/1842/23359. (2017).

[27] Rossetti, M., Stella, F. and Zanker, M. 2016. Contrasting Offline and Online Results when Evaluating Recommendation Algorithms. Proceedings of the 10th ACM Conference on Recommender Systems (Boston, Massachusetts, USA, 2016), 31–34.

[28] Tan, J., Wan, X., Liu, H. and Xiao, J. 2018. QuoteRec: Toward Quote Recommendation for Writing. ACM Transactions on Information Systems (TOIS). 36, 3 (2018), 34.

[29] Vargas, S., Hristakeva, M. and Jack, K. 2016. Mendeley: Recommendations for Researchers. Proceedings of the 10th ACM Conference on Recommender Systems (Boston, Massachusetts, USA, 2016), 365–365.
